Fantastic Futures (FF24), the international conference on AI for Libraries, Archives, and Museums, was held at the National Film and Sound Archive of Australia (NFSA) in Canberra, Australia, from 15 to 18 October 2024.

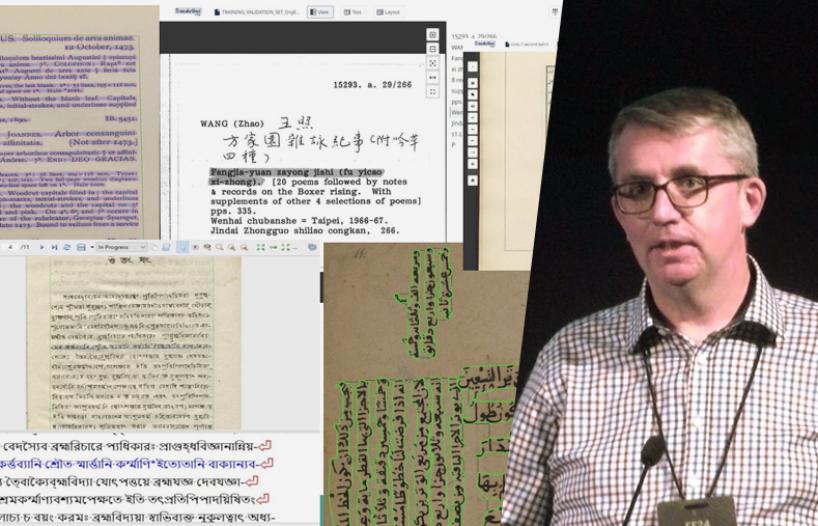

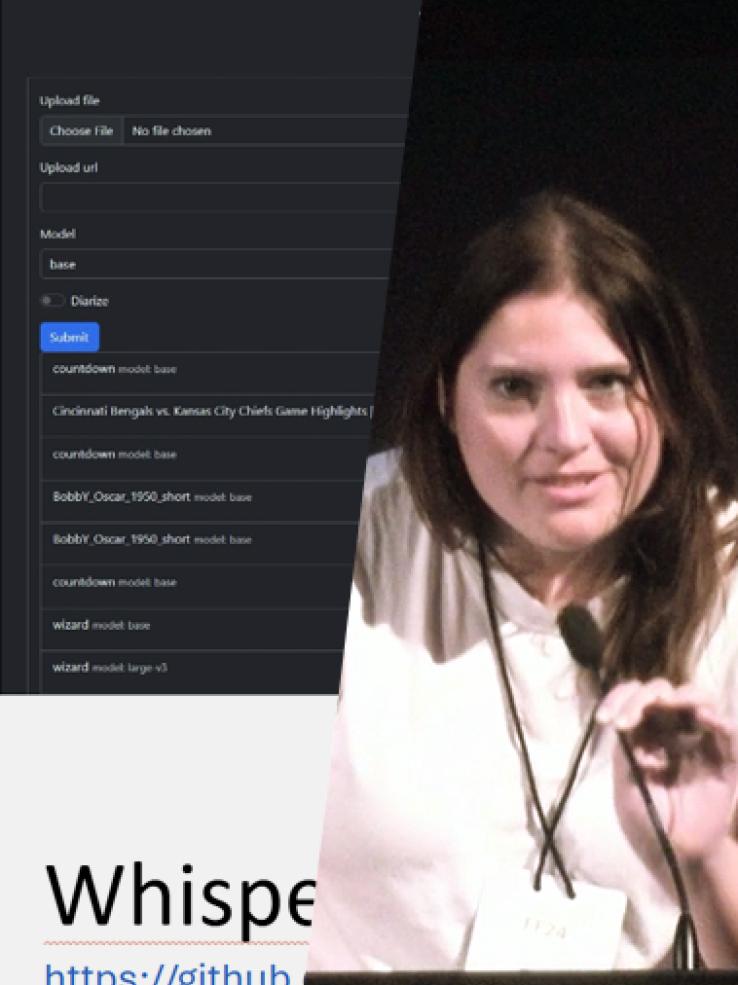

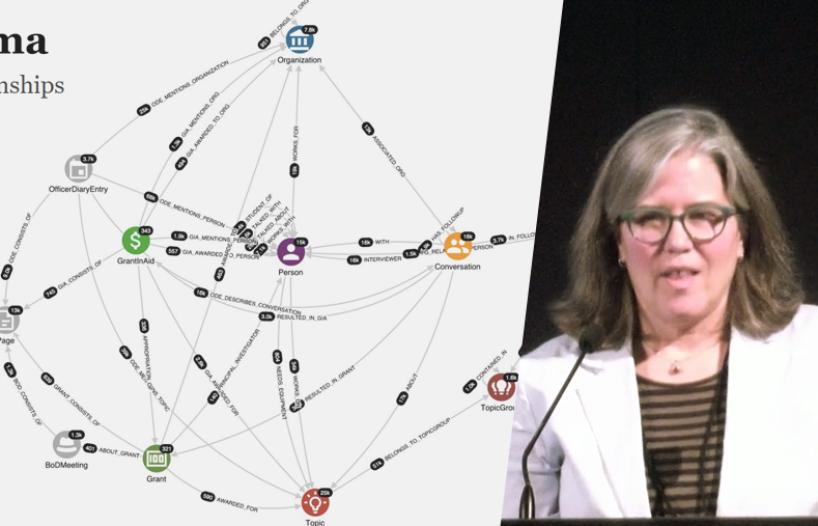

The conference combined international and Australian keynote presentations, workshops, demonstrations, academic papers and creative commissions. It was complemented by a rich on-site experience comprising two days of bespoke pre-conference workshops, a networking dinner, Canberra tours and tailored events, including unique access to the NFSA collection and a performance by award-winning Australian audiovisual artist and composer Robin Fox.

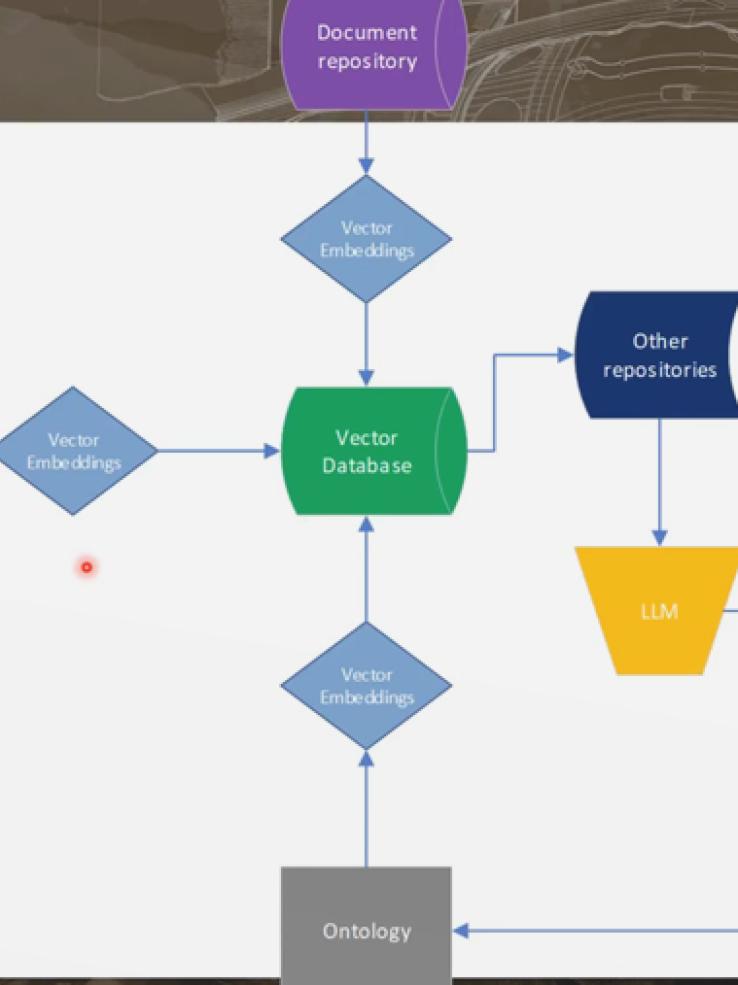

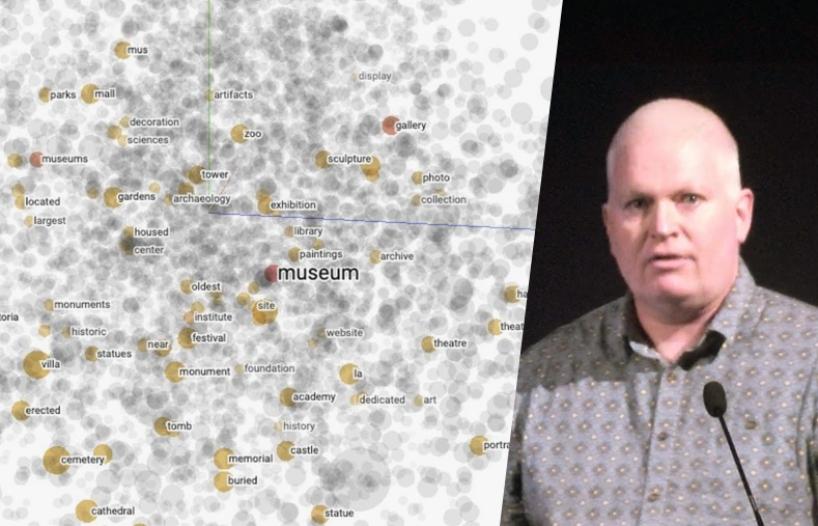

Under the 2024 conference theme, Artificial Intelligence in the Future of Work in GLAMs (Galleries, Libraries, Archives and Museums), the four-day event explored the current state and potential futures of artificial intelligence and generative AI within the GLAM sector through the lenses of history, language and culture in relation to place, particularly in an Australasian context.

About AI4LAM

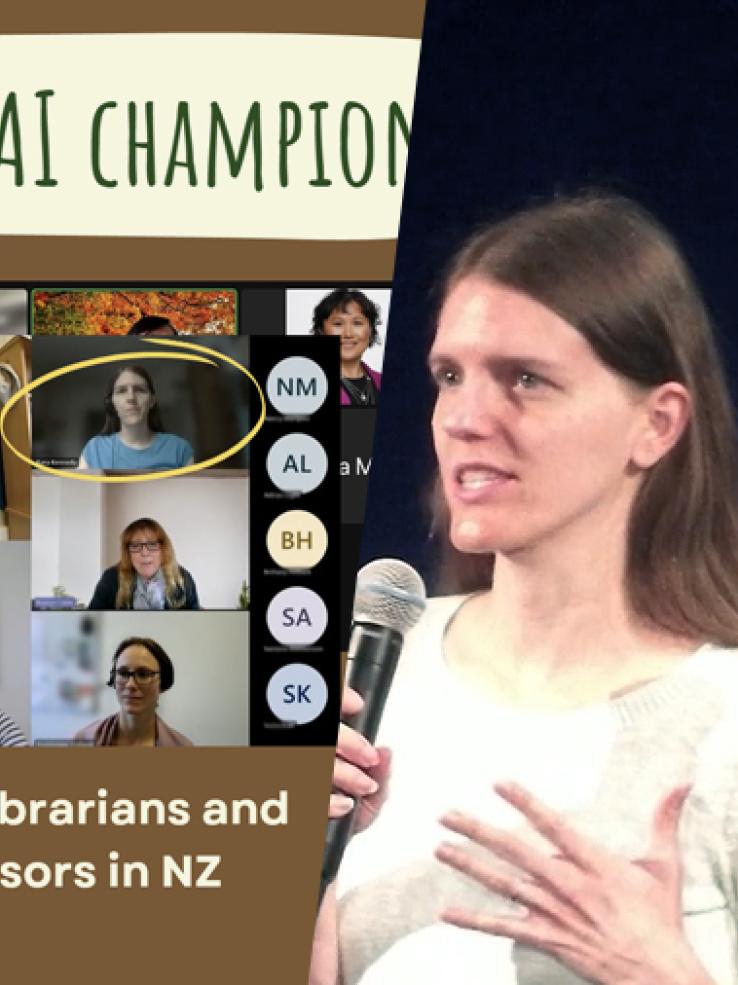

AI4LAM (Artificial Intelligence for Libraries, Archives, Museums) is a collaborative framework for libraries, archives and museums to organise, share and elevate their knowledge about and use of artificial intelligence. Individually we are slow and isolated; collectively we can go faster and farther. The Australia and Aotearoa New Zealand Regional Chapter of AI4LAM was initiated in 2020.